By Sylvia Lorico

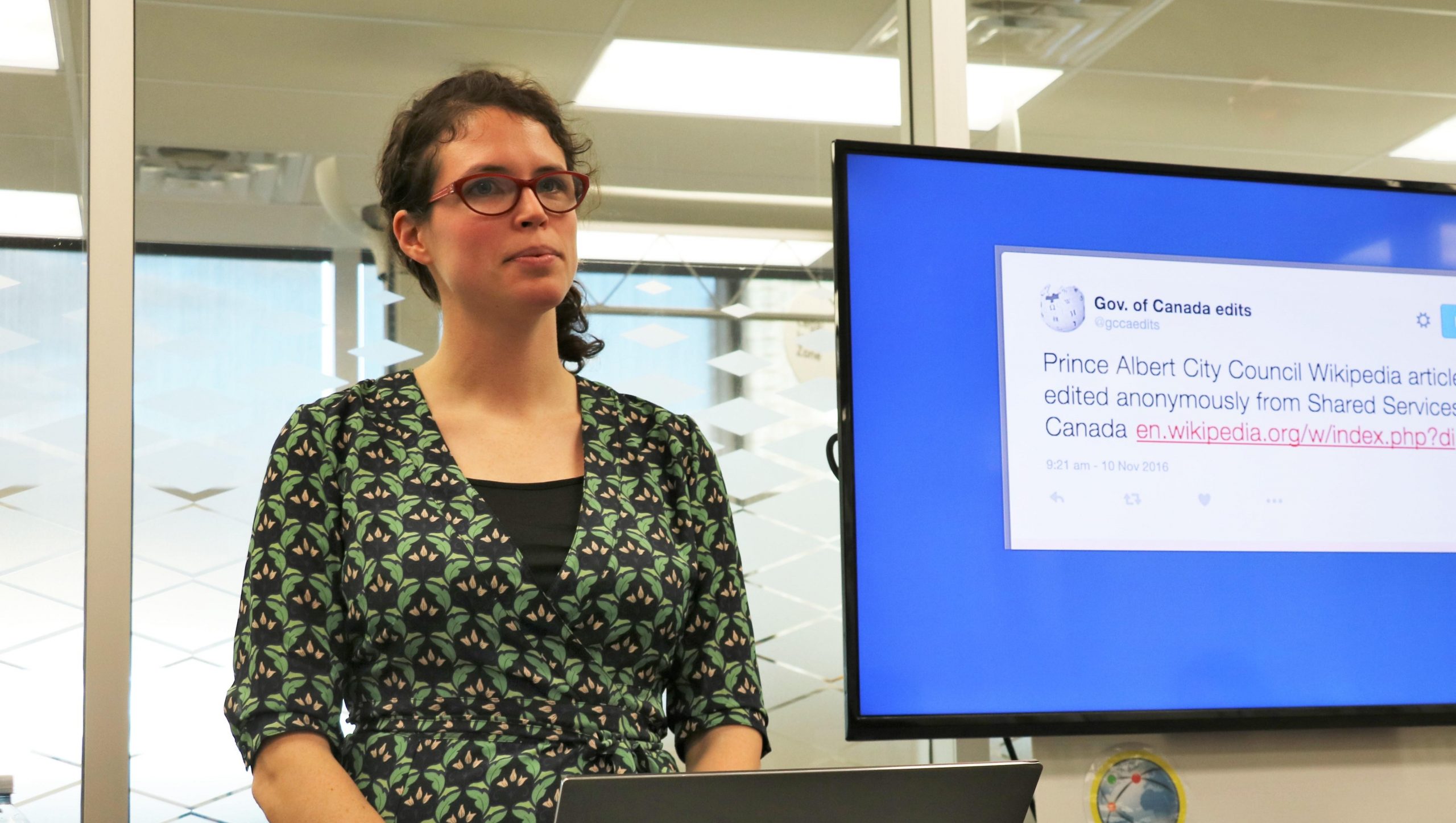

Twitter bots can make politics more transparent or spread misinformation online, but nothing regulates them, said Elizabeth Dubois, an associate professor at the University of Ottawa. Dubois spoke about Twitter bots at Ryerson’s Social Media Lab on Friday.

A Twitter bot is an automated system that creates content or interacts with Twitter users without the help of a human being. It can comment on or like tweets based on recurring words or phrases. Political Twitter bots have political leanings, which show in the type of content they post and in the kinds of people they follow and retweet.

A study by Philip Howard, a professor at Oxford University, found that between Sept. 26, the day of the first presidential debate, and Sept. 29, bots posted 32.7 per cent of pro-Trump tweets and 22.3 per cent of pro-Clinton tweets.

“Bots generally aren’t good or bad, but actors that are within a system of other actors,” Dubois said. “It’s the sharing of information and the relationship between different actors that make them ‘good’ or ‘bad.’”

Dubois focused her research on transparency bots, which publicize edits made to certain pages from particular IP addresses. She looked at bots that tweet Wikipedia edits made from Canadian government IP addresses.

She said that, initially, these bots helped make information transparent and accessible to people. They allowed journalists to verify information by revealing where an edit to a page came from.

“Very few of us go to a particular government site to look for information; we either Google it or we go to Wikipedia first,” Dubois said.

The media highlighted questionable edits made from government IP addresses. Some edits drew scrutiny because they removed information about political figures. Others, such as an edit that added profanity to a page about Syrian refugees, were simple vandalism.

As a result, people began hiding their IP addresses when making edits, which reduced the number of edits and hurt public perception of political Twitter bots.

Political Twitter bots serve many different purposes. Some are used to increase the number of followers on a political account while others can be used to parody a political figure or idea.

According to Dubois, it is not difficult for someone to create a political bot. She said there are even online advertisements explaining how to make one.

Dubois said bots raise some ethical problems. There is no reliable way to tell which bots are designed to deceive people rather than help them. Bots can also change their behaviour in response to political developments, so it is difficult to track them or set ethical standards for them.

“The internet likes to break things,” she said. “For example, Microsoft created a bot called ‘Tay’ to converse with other people. It started out really good but really quickly, Tay became a Nazi.”

Bradly Dahdaly, a research assistant at Ryerson’s Social Media Lab, said that while political bots do cause some problems, they don’t need to be regulated.

“Once a bot is out there, it’s for the public. Bots can sometimes flood people with misconceptions because of their political leanings, but it’s a freedom of speech thing.”
