By Vihaan Bhatnagar

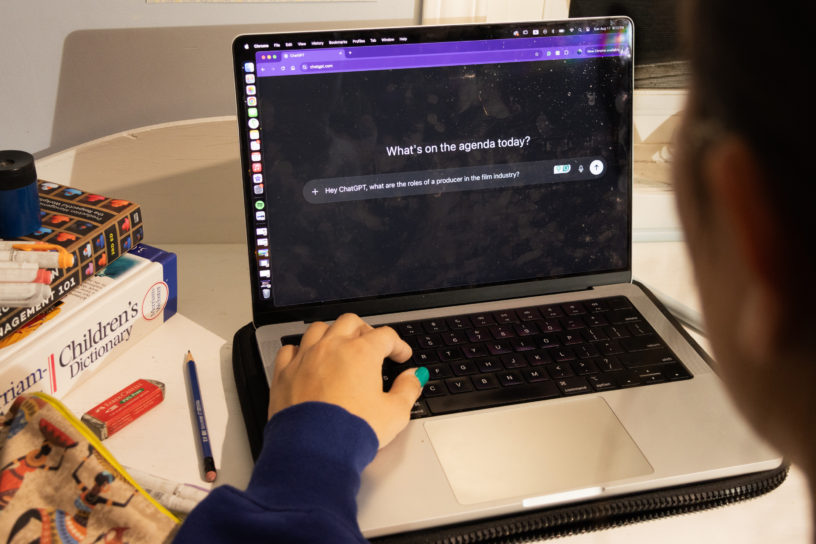

Artificial intelligence (AI) is being hailed as a powerful teaching tool, with some studies suggesting it can help students learn faster than traditional teaching does. A World Bank study released in May found that students made substantially higher learning gains when they were supported by AI.

The study involved six weeks of daily sessions with Microsoft Copilot, an AI tool built on OpenAI's GPT-4 large language model (LLM). The study reported that the gains were equivalent to a year and a half to two years of traditional schooling.

Closer to home, some instructors at Toronto Metropolitan University (TMU) have already started weaving AI into their teaching, though only within the bounds of TMU's Policy 60, which prohibits students from using AI unless the instructor explicitly permits it.

According to the latest Canadian Student Wellbeing Survey, conducted by YouGov for Studiosity, an academic support provider, 78 per cent of Canadian students surveyed said they had used AI to study.

Nagina Parmar, an adjunct professor at TMU, is among the educators who allow the use of AI in their classes.

Parmar, who primarily teaches medical microbiology in the biomedical sciences program, said she implements specific rubrics for students who choose to use AI and requires them to disclose when AI has been used. In a science and policy development class Parmar has taught, students had the option of using AI to assist with role-play exercises.

TMU's Academic Integrity Office and the Centre for Excellence in Learning and Teaching (CELT) said that the use of AI is always at the instructor's discretion. They added that "whatever the acceptable use parameters are…should be communicated to students clearly in a syllabus or other course policy statement."

Parmar encourages students to use AI as a tool, but she doesn't allow it on assignments that require critical thinking.

“For the past five or six years, my lectures have been mostly [problem-based learning], where students have to do critical thinking,” said Parmar. “Not necessarily just…go to ChatGPT and search the answers, because the cases we studied in the classroom…you have to know the basics as well.”

In one of her classes, Parmar has students analyze the structures of specific drugs and then discuss them in their own words, an activity in which she said the use of AI would be obvious to her. In this case, using AI would be considered a breach of Policy 60, according to Parmar.

Parmar said there are both pros and cons to using AI but cautioned that students who lean on it will “become more reliant, totally dependent on that sort of problem solving and hinder the development of critical thinking as well.”

She added that the concern is greatest once students enter the workforce and have to write their own emails and other correspondence.

Karim Butt, a fall 2024 graduate of the performance acting program at TMU, said he has used AI to help with schoolwork. He said he didn't plagiarize and only used AI to help him find sources for an assignment.

According to Butt, using AI to make art is widely frowned upon in the arts community, but many of his peers still use the tool for help with schoolwork.

“AI has kind of made learning a bit lazier,” Butt said. “I think it’s a bit dangerous because if AI can teach you everything…why do we have institutions? Why do we have schools?”

He said he doesn't know much about how professors use AI, but he is against professors handing all their responsibilities over to an AI tool. "Teaching is best done from human to human," he said.

Parmar echoed Butt's point, saying students "need that human connection." She emphasized that even as companies adopt AI within ethical limits, professionals still interact directly with patients, which keeps human communication essential.

Butt warned that AI "could be the downfall of education," arguing that reliance on the technology risks undermining the purpose of schools and professors by introducing an unnecessary middleman.

“If my students are not coming to the class and are not engaged, that means whether I use AI or not, or encourage the use of AI—it doesn’t make any difference,” said Parmar.
